New Course: Testing Progressive Web Apps on Pluralsight

Today my new course, Testing Progressive Web Apps, was published and I'm pretty excited about it (as usual!).

What I Learned

I learned quite a bit while developing this course because in order to test a PWA, you need to have a PWA in the first place!

For the course, I developed the Carved Rock Fitness Order Tracker, an open source sample application for demo purposes.

This is a fully developed PWA which features:

- Offline support

- Service worker caching

- Local notifications

- Responsive design

- Cypress and WebDriverIO tests

- Local and BrowserStack-powered tests

The course uses this app to walk through the scenarios you'd encounter when testing a PWA, like how to bypass service worker caching, handle notification permissions, and more.
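To make "bypassing service worker caching" a little more concrete, here is a minimal sketch of one common approach: unregistering every service worker before a test so the page loads from the network. This is not necessarily how the course does it. The function name and the two small interfaces are made up for illustration; they only mirror the shape of the browser's `navigator.serviceWorker.getRegistrations()` and `ServiceWorkerRegistration.unregister()` so the sketch compiles without DOM typings.

```typescript
// Hypothetical structural types mirroring the parts of the Service Worker API
// this sketch touches (getRegistrations and unregister).
interface RegistrationLike {
  unregister(): Promise<boolean>;
}

interface ServiceWorkerContainerLike {
  getRegistrations(): Promise<ReadonlyArray<RegistrationLike>>;
}

// Unregister every service worker known to the container and report how many
// unregistrations the browser says succeeded.
async function unregisterAllServiceWorkers(
  container: ServiceWorkerContainerLike,
): Promise<number> {
  const registrations = await container.getRegistrations();
  const results = await Promise.all(registrations.map((r) => r.unregister()));
  return results.filter(Boolean).length;
}
```

In a browser test you would pass in the page's `navigator.serviceWorker`, for example from inside a `cy.window()` callback in Cypress. Unregistering a worker does not empty Cache Storage, so a test that needs a truly cold start would likely have to clear that as well.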

Since Cypress didn't offer a built-in way to control browser permissions, I also released the cypress-browser-permissions plugin, which lets you manage browser permissions in a standard way for Chromium (Google Chrome, MS Edge) and Firefox.

The sample was built using Ionic Framework and it was my first time using the framework to build something (there's no better way to learn, right?). I was extremely impressed with how easy it was to build using the framework and all the features provided out-of-the-box. I think there's even more that could be done using Capacitor, which I'm not currently using, to provide abstractions over some of the native APIs like notifications, geolocation, etc.

In the course, I use Netlify to host deployment previews. For GitHub Actions, I forked an existing action and made it work a bit better by using the Netlify API to "wait" until the deployment preview for a commit was up and running before running tests against it.
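The "wait" step boils down to polling until a preview for the commit reports that it is live. The sketch below is not the code from the forked action; it is a minimal illustration of the idea. The `Deploy` shape, the `"ready"` state value, and the injected `listDeploys` function are all assumptions, and in a real workflow `listDeploys` would wrap a call to the Netlify API.

```typescript
// Hypothetical shape: only the fields this sketch reads.
interface Deploy {
  commitRef: string;
  state: string; // assumed to become "ready" once the preview is live
  url: string;
}

// Injected so the sketch stays independent of any particular HTTP client.
type ListDeploys = () => Promise<Deploy[]>;

// Pick the deploy for the given commit, but only once it is ready.
function findReadyDeploy(deploys: Deploy[], commitSha: string): Deploy | undefined {
  return deploys.find((d) => d.commitRef === commitSha && d.state === "ready");
}

const sleep = (ms: number): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, ms));

// Poll until the preview is ready, giving up after maxAttempts tries.
async function waitForDeploy(
  listDeploys: ListDeploys,
  commitSha: string,
  maxAttempts = 30,
  delayMs = 10000,
): Promise<Deploy> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const ready = findReadyDeploy(await listDeploys(), commitSha);
    if (ready) return ready;
    if (attempt < maxAttempts) await sleep(delayMs);
  }
  throw new Error(`No ready deploy for ${commitSha} after ${maxAttempts} attempts`);
}
```

The test step can then point its base URL at the returned `url`. Capping the number of attempts means a failed or stuck deployment fails the job instead of hanging it.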

How Much Work Was It?

This was the first time I used Clockify in earnest to track the various aspects of course production. I could track each checklist item (which is a bit too granular), but by using tags I could also group activities like content creation, coding, recording, and editing together.

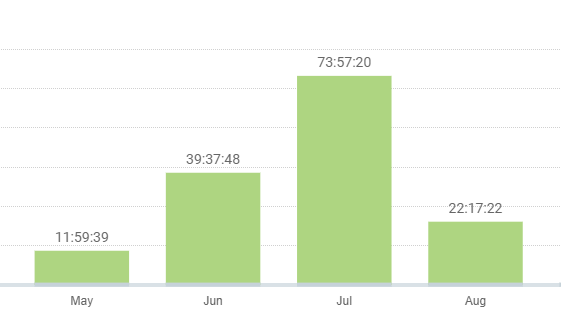

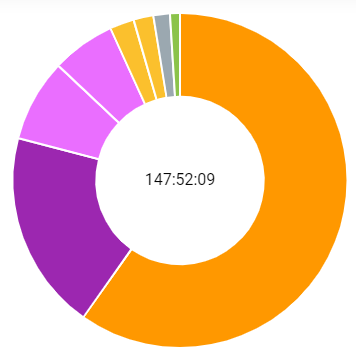

In total, I spent about 150 hours, give or take (I didn't track assessment questions quite as closely), with a monthly breakdown like this:

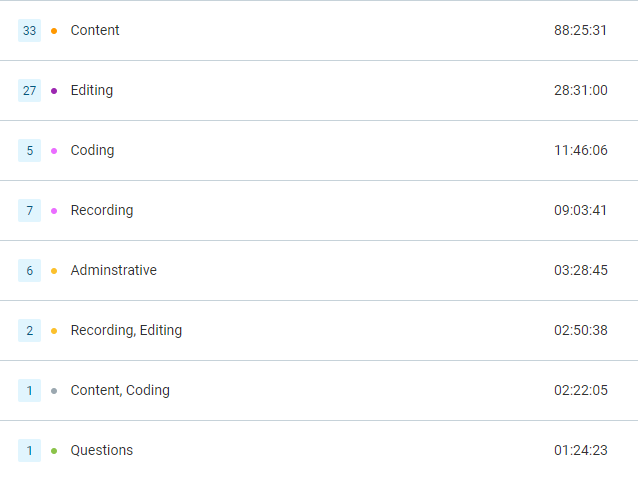

More interesting perhaps is the breakdown of time spent on different aspects of course development:

Most of the time spent developing a course goes to content creation (88 hours) and research, followed by editing (28 hours). Interestingly, developing the sample app took less than 15 hours of work, although time spent tweaking and polishing it throughout course development is also wrapped up in "Content".

This insight is good to have because it lets me judge whether I should use some of Pluralsight's packaged services for editing in the future. For example, if I spend 30 hours editing a 90-minute course and I am paid $X, I can do the math to determine whether hiring out editing is worth it.
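That math can be sketched in a few lines. The hours come from the tracking in this post, while the payment and the editing service cost are hypothetical placeholders (no real figures appear here), and the function names are made up for illustration. The comparison is simply the effective hourly rate with and without outsourcing.

```typescript
interface CourseEconomics {
  payment: number;            // hypothetical: the "$X" paid for the course
  totalHours: number;         // all tracked hours, editing included
  editingHours: number;       // hours that outsourcing would free up
  editingServiceCost: number; // hypothetical: price of the editing service
}

// Effective hourly rate when I do all the editing myself.
function hourlyRateSelfEdited(c: CourseEconomics): number {
  return c.payment / c.totalHours;
}

// Effective hourly rate when editing is paid for out of the course payment.
function hourlyRateOutsourced(c: CourseEconomics): number {
  return (c.payment - c.editingServiceCost) / (c.totalHours - c.editingHours);
}

// The service cost at which both rates are equal; anything cheaper
// raises the effective hourly rate.
function breakEvenEditingCost(c: CourseEconomics): number {
  return (c.payment * c.editingHours) / c.totalHours;
}

// Placeholder dollar amounts, plugged in next to the tracked hours.
const example: CourseEconomics = {
  payment: 15000,
  totalHours: 150,
  editingHours: 28,
  editingServiceCost: 2000,
};

console.log(hourlyRateSelfEdited(example));
console.log(hourlyRateOutsourced(example));
console.log(breakEvenEditingCost(example));
```

The break-even cost is just the payment scaled by the share of hours spent editing. It ignores what the freed-up hours would actually be used for, which matters at least as much as the rate itself.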

As far as content goes, a lot of time went into research, since testing PWAs using Cypress and WebDriverIO doesn't seem to be a common thing to do (and now, maybe my course will demystify a lot of it). The 88 hours of content creation includes lots of other things like writing tests, researching bugs, waiting for CI runs, etc. While writing tests with Ionic, I discovered a couple of Cypress bugs along the way, all of which have been fixed as of the course's publication, which is awesome!

Relative to my previous courses, this one took about twice as long, which I attribute to the additional research and test implementation, and to Ionic, which I was using to build something for the first time. It was twice as much as I anticipated, but I think it worked out!

What Would I Have Done Differently?

I think that this course is fairly fast-paced. I don't stop much to ponder the implementation or explain details when going over the tests. For someone who just needs to know what goes into writing a test for a feature, I think it'll be a good pace. For someone else who wants to know why, the sample app is there for reference.

If I were producing this course entirely on my own, I think I'd split it up or make it longer in order to explain how I built the sample or why a test was written a certain way. However, the target length of a course is around 90 minutes and it was hard enough to edit down to meet that (it's 100 minutes).

If I have a chance to update the course for a next edition (which is likely, as Cypress has already released a new major version since I published), I think I'd show the app side-by-side with the test code, which is something I did in the final module. I think this made it super clear what we were testing -- and it worked well in that case because it was a BrowserStack device test. Originally, I had planned to show the Cypress test runner on screen side-by-side, but the way the test runner works didn't allow for that very effectively (with watch + reload and not a lot of room). However, recording the manual steps in BrowserStack and overlaying the recording on top may work in the future for the other test modules.

Conclusion

Overall I'm really happy with how the course turned out! I always have to remember that perfect is the enemy of good. That's one reason why I like doing Pluralsight courses -- I sign a contract saying I'll get it done and it forces me to prioritize what matters instead of fiddling with stuff forever.

Check out the course if you have a subscription!